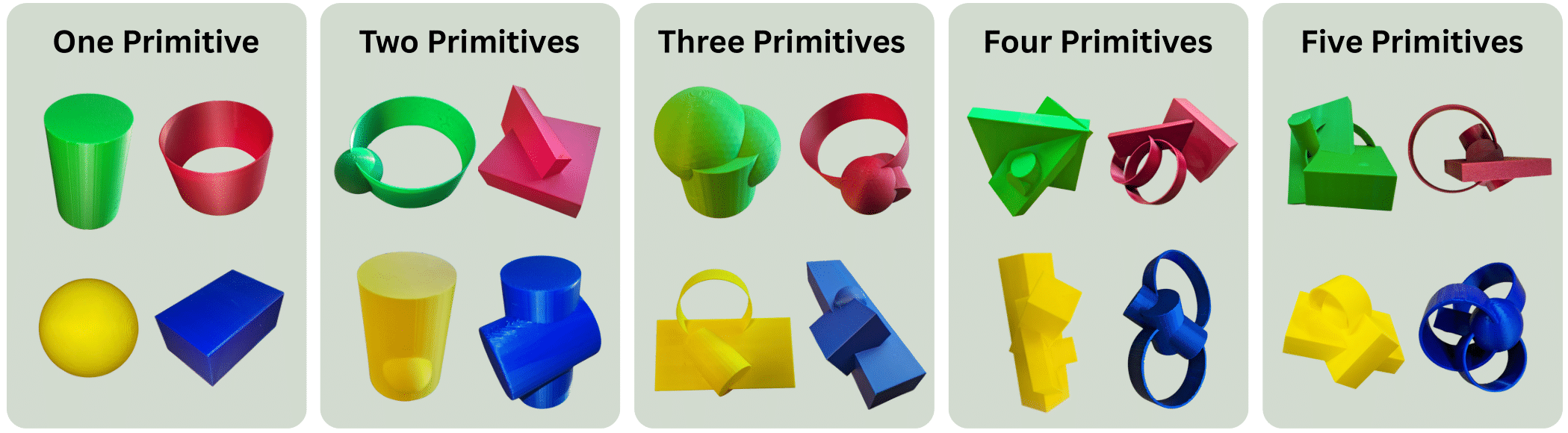

Grasping Demonstrations for Randomized Toys (Training Set)

Zero-Shot Grasping on Real World Objects (Evaluation Set)

Real-World Franka DROID Grasping Results

Results of zero-shot grasping in the real-world Franka DROID setting, on 64 objects from the YCB Object Benchmark. Models tuned on toys are trained with a total of 1500 grasping demonstrations across 250 toys. During evaluation, each YCB object is tested 16 times across a predefined 4x4 grid, and results are averaged to get the final success rate. LEGO achieves an overall success rate of 67%, outperforming large-scale robotic models such as zero-shot π₀-FAST and OpenVLA, as well as ShapeGrasp, which uses a pre-trained LLM to choose grasp points.

Real-World H1-2 + Dexterous Hands Grasping Results
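
As a rough illustration of how repeated trials turn into a single success rate in the real-world evaluations (for example, 16 trials per YCB object in the Franka DROID setting), the sketch below averages per-object success rates into an overall rate. The function names and the trial outcomes are hypothetical and are not taken from the LEGO codebase; when every object has the same number of trials, this average equals the pooled success rate over all trials.

```python
from statistics import mean


def object_success_rate(trials):
    """Fraction of successful grasps for one object (trials: list of bools)."""
    return sum(trials) / len(trials)


def overall_success_rate(results):
    """Mean of per-object success rates; results maps object name -> list of bools."""
    return mean(object_success_rate(t) for t in results.values())


# Made-up outcomes for two objects with 16 trials each (not real results).
results = {
    "object_a": [True] * 12 + [False] * 4,  # 12 of 16 grasps succeed
    "object_b": [True] * 8 + [False] * 8,   # 8 of 16 grasps succeed
}

overall = overall_success_rate(results)
print(f"overall success rate: {overall:.3f}")
```

The same aggregation applies to any number of trials per object, such as the 5 trials per object used in the H1-2 setting.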

Results of zero-shot grasping in the real-world H1-2 humanoid with dexterous hands setting, on 13 real-world objects. Models were tuned on a total of 500 grasping demonstrations across 250 toys. During evaluation, each object was tested 5 times across a predefined grid, and results were averaged to get the final success rate. LEGO achieves an overall success rate of 51%, outperforming both large-scale VLA models tested, π₀-FAST and OpenVLA.

Scaling Ablations

We perform an ablation study in simulation to examine how both the number of unique toys in the training set and the number of grasping demonstrations influence performance. Specifically, we construct six object sets containing 1, 25, 125, 250, 500, and 1000 unique toys, respectively. For each set, we collect 2,500 grasping demonstrations and train our model using varying numbers of demonstrations per set. The results, shown in the left panel of the figure below, indicate that increasing the number of unique objects improves performance, but with diminishing returns. In contrast, the number of demonstrations has a stronger impact on learning generalizable grasping. The right panel of the figure illustrates the results of an ablation study on the size of the model's transformer policy, where we find ViT-B (86M parameters) to achieve the best overall performance.
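
The ablation design above can be sketched as a grid over toy-set size and demonstration budget. The toy counts and the 2,500-demonstration pool per set come from the text; the specific budgets in `DEMO_BUDGETS`, the helper names, and the uniform random subsampling are assumptions made for illustration only, since the page does not state which demonstration counts were used or how subsets were drawn.

```python
import itertools
import random

TOY_COUNTS = [1, 25, 125, 250, 500, 1000]  # unique toys per object set (from the text)
DEMOS_PER_SET = 2500                       # demonstrations collected per set (from the text)
DEMO_BUDGETS = [500, 1500, 2500]           # assumption: placeholder budgets, not the paper's values


def ablation_configs(toy_counts, demo_budgets):
    """Enumerate every (number of toys, number of demonstrations) training run."""
    return [
        {"num_toys": toys, "num_demos": demos}
        for toys, demos in itertools.product(toy_counts, demo_budgets)
    ]


def subsample_demos(pool_size, budget, seed=0):
    """Pick `budget` distinct demonstration indices from a pool (assumed uniform sampling)."""
    rng = random.Random(seed)
    return sorted(rng.sample(range(pool_size), budget))


configs = ablation_configs(TOY_COUNTS, DEMO_BUDGETS)
for cfg in configs[:3]:
    indices = subsample_demos(DEMOS_PER_SET, cfg["num_demos"])
    print(cfg, "->", len(indices), "demonstrations selected")
```

Each configuration would then correspond to one training run, so that the effect of the number of unique toys and of the number of demonstrations can be compared separately.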